

A Rule-Based Approach for Building an Artificial English-ASL Corpus

Zouhour Tmar, Achraf Othman and Mohamed Jemni, Research Lab. LaTICE, University of Tunis, Tunisia

Abstract—A serious problem facing the community of researchers in the field of sign language is the absence of a large parallel corpus for sign languages. The ASLG-PC12 project proposes a rule-based approach for building a big parallel corpus between English written texts and American Sign Language Gloss. We present a novel algorithm which transforms an English part-of-speech sentence into ASL gloss. This project was started at the beginning of 2010 as a part of the project WebSign, and today it offers a corpus containing more than one hundred million pairs of sentences between English and ASL gloss. It is available online for free in order to develop and design new algorithms and theories for American Sign Language processing, for example statistical machine translation, and any related fields. In this paper, we present tasks for generating ASL sentences from the corpus of the Gutenberg Project, which contains only English written texts.

Index Terms—Natural Language Processing, Sign Language, Parallel Corpora.

U.S. Government work not protected by U.S. copyright

I. INTRODUCTION

To develop an automatic translator or any tool that requires a learning task for sign languages, the major problem is the collection of a parallel corpus between text and Sign Language. A parallel corpus is a large, structured set of texts aligned between source and target languages. Such corpora are used for statistical analysis [1] and hypothesis testing, for checking occurrences, or for validating linguistic rules on a specific universe. There is no standard and sufficiently large corpus [2], yet developing a statistics-based automatic translation system without pre-treatment prior to the learning process requires a large volume of data. In many ways, progress in sign language research is driven by the availability of data. For these reasons, we started to collect pairs of sentences between English and American Sign Language Gloss [3]. Because data are scarce, especially for ASL, while a huge amount of English written text exists, we have developed a corpus based on a collaborative approach where experts contribute to the collection or correction of the bilingual corpus, or validate the automatic translation. This project [4] was started in 2010 as a part of the project WebSign [5] [6], which develops tools that make information on the web accessible to deaf people [7] [8]. The main goal of our project is to develop a Web-based interpreter of Sign Language (SL). This tool would enable people who do not know Sign Language to communicate with deaf individuals and would therefore contribute to reducing the language barrier between deaf and hearing people. Our secondary objective is to distribute this tool on a non-profit basis to educators, students, users, and researchers, and to disseminate a call for contributions to support this project, mainly in its exploitation step, and to encourage its wide use by different communities. In this paper, we review our experience with constructing one such large annotated parallel corpus between English written text and American Sign Language Gloss, the ASLG-PC12, a corpus consisting of over one hundred million pairs of sentences.

The paper is organized as follows. Section II presents several projects concerning sign language. In Section III, we describe the gloss notation system. In Section IV, we define methods and pre-processing tasks for collecting data from the Gutenberg Project [9]; we present two stages of pre-processing, in which each sentence is extracted and tokenized. Section V presents our method and algorithms for constructing the second part of the corpus, in American Sign Language Gloss. The constructed texts were generated automatically by transformation rules and then corrected by human experts in ASL. We also describe the composition and the size of the corpus. Discussion and conclusion are drawn in Section VI.

II. BACKGROUND

Several projects concerned with Sign Language have recorded or annotated their own corpora, but only a few of them are suitable for automatic Sign Language translation, due to the limited amount of data available for learning and processing. The European Cultural Heritage Online organization (ECHO) published corpora for British Sign Language [10], Swedish Sign Language [11] and the Sign Language of the Netherlands [12]. All of these corpora include several stories signed by a single signer. The American Sign Language Linguistic Research group at Boston University published a corpus in American Sign Language [13]. TV broadcast news for the hearing impaired is another source of sign language recordings. Aachen University published a German Sign Language Corpus of the Domain Weather Report [14], and Morrissey et al. [15] published a multimedia Sign Language corpus for machine translation. In the literature, we found many related projects aiming to build corpora for Sign Language. Most of them are based on video recordings, and we could not find textual data for building a translation memory. Textual data for Sign Language is not a simple written form, because signs can carry other information, such as eye gaze or facial expressions. So, for our corpus, we use glosses to represent Sign Language. In the next section, we present a brief description of glosses.

III. GLOSSING SIGNS

Stokoe [16] proposed the first annotation system for describing Sign Language. Before that, signs were thought of as unanalyzed wholes, with no internal structure. The Stokoe notation system is used for writing American Sign Language using graphical symbols. Later, other notation systems appeared, such as HamNoSys [17] and SignWriting. Furthermore, glosses are used to write signs in textual form. Glossing means choosing an appropriate English word for a sign in order to write it down. It is not translating, but it is similar to translating. A gloss of a signed story can be a series of English words, written in small capital letters, that correspond to the signs in the ASL story. Some basic conventions used for glossing are as follows:

• Signs are represented with small capital letters in English.

• Lexicalized finger-spelled words are written in small capital letters and preceded by the '♯' symbol.

• Full finger-spelling is represented by dashes between small capital letters (for example, A-H-M-E-D).

• Non-manual signals and eye-gaze are represented on a

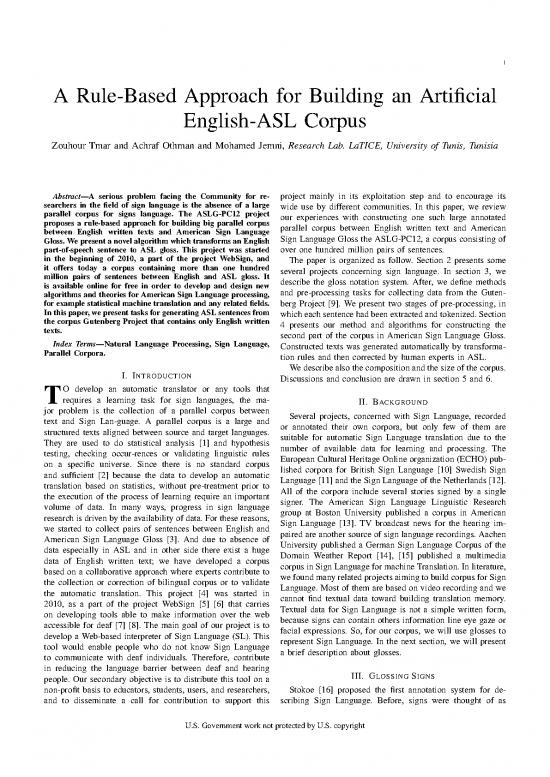

line above the sign glosses. Fig. 1. Occurrence of Zipf’s Law in Gutenberg Corpora of English Texts,

In this work, we use glosses to represent Sign Language. In the the top ten words are (the, I, and, to, of, a, in, that, was, it)

next section, we will describe steps for building our corpus.
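To make the glossing conventions of this section concrete, the following Python sketch renders each of them as plain text. It is only an illustration: the function names (`sign`, `lexicalized`, `fingerspell`, `with_nms`) are hypothetical, upper case stands in for small capitals, and writing the non-manual label at the right end of the line is one possible plain-text rendering, not a convention stated in this paper.

```python
from typing import List


def sign(word: str) -> str:
    """A sign is written with the English word in capitals (small capitals in print)."""
    return word.upper()


def lexicalized(word: str) -> str:
    """A lexicalized finger-spelled word is preceded by the '♯' symbol."""
    return "♯" + word.upper()


def fingerspell(word: str) -> str:
    """Full finger-spelling: capital letters separated by dashes."""
    return "-".join(letter.upper() for letter in word if letter.isalpha())


def with_nms(glosses: List[str], label: str) -> str:
    """Draw a non-manual signal as a labelled line above the glosses it covers.

    Hypothetical rendering: underscores span the glosses, label at the right end.
    """
    text = " ".join(glosses)
    line = "_" * max(len(text) - len(label), 0) + label
    return line + "\n" + text


print(fingerspell("Ahmed"))  # A-H-M-E-D
print(with_nms([sign("you"), sign("like")], "q"))
```

The second call prints a line of underscores ending in the label `q` above `YOU LIKE`, which mimics the "line above the sign glosses" described in the last convention.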

IV. PARALLEL CORPUS COLLECTION

A. Collecting data from Gutenberg

Acquiring a parallel corpus for use in statistical analysis typically takes several pre-processing steps. In our case, there is not enough data between English texts and American Sign Language, so we start by collecting only English data from the Gutenberg Project, with a view to transforming it into ASL gloss. The Gutenberg Project [9] offers over 38K free ebooks, and more than 100K ebooks through its partners. The collecting task is made in four steps:

• Obtain the raw data (by crawling all files in the FTP directory).

• Extract only English texts, because there are ebooks in languages other than English, such as German and Spanish. We also found files containing DNA sequences.

• Break the text into sentences (sentence splitting task).

• Prepare the corpora (normalization, tokenization).

In the following, we describe in detail the acquisition of the Gutenberg corpus from the FTP directory. Figure 1 shows the occurrence of Zipf's Law in the Gutenberg corpora of English texts. We found that words like (the, I, and, to, of, a, in, that, was, it) are the ten most frequent words in the corpora. This metric also shows which words are frequently used in English. In addition, substantial work was needed to remove non-English texts.

Fig. 2. An example of transformation: English input 'What did Bobby buy yesterday?'

B. Sentence splitting, tokenization, chunking and parsing

Sentence splitting and tokenization require specialized tools for English texts. One problem of sentence splitting is the ambiguity of the period "." as either an end-of-sentence marker or a marker for an abbreviation. For English, we semi-automatically created a list of known abbreviations that are typically followed by a period. Issues with tokenization include English contractions such as "can't" (which we transform to "can not") and the separation of possessive markers ("the man's" becomes "the man 's"). We also use an available sentence-splitting tool called Splitta. Its models are trained on Wall Street Journal news combined with the Brown Corpus, which is intended to be widely representative of written English; error rates on test news data are near 0.25%. Finally, we use the CoreNLP tool, a set of natural language analysis tools that takes raw English text as input and gives the base forms of words and their parts of speech.
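The splitting and tokenization rules described above, together with the word counts behind Figure 1, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the Splitta or CoreNLP processing used in the project: the abbreviation list is a small hypothetical sample, only ASCII apostrophes are handled, and only the two tokenization cases named in the text ("can't" and the possessive "'s") are implemented.

```python
import re
from collections import Counter
from typing import List, Tuple

# Hypothetical sample of known abbreviations that are followed by a period.
ABBREVIATIONS = {"mr.", "mrs.", "dr.", "st.", "e.g.", "i.e."}
PUNCTUATION = ".,!?;:"


def split_sentences(text: str) -> List[str]:
    """End a sentence at '.', '!' or '?' unless the word is a known abbreviation."""
    sentences, current = [], []
    for word in text.split():
        current.append(word)
        if word[-1] in ".!?" and word.lower() not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:  # trailing text without final punctuation
        sentences.append(" ".join(current))
    return sentences


def tokenize(sentence: str) -> List[str]:
    """Expand "can't" to "can not", detach possessive "'s" and trailing punctuation."""
    sentence = re.sub(r"\b(can)'t\b", r"\1 not", sentence, flags=re.IGNORECASE)
    sentence = re.sub(r"(\w)'s\b", r"\1 's", sentence)
    tokens: List[str] = []
    for word in sentence.split():
        if word.lower() in ABBREVIATIONS:
            tokens.append(word)  # keep the abbreviation's period attached
            continue
        core = word.rstrip(PUNCTUATION)
        if core:
            tokens.append(core)
        tokens.extend(word[len(core):])  # one token per trailing punctuation mark
    return tokens


def top_words(sentences: List[str], n: int = 10) -> List[Tuple[str, int]]:
    """Most frequent lower-cased word tokens, as used for a Zipf rank plot."""
    counts = Counter(tok.lower() for s in sentences for tok in tokenize(s)
                     if tok[0].isalpha())
    return counts.most_common(n)


sentences = split_sentences("Mr. Smith can't come. The man's dog barked at the cat!")
print(tokenize(sentences[0]))  # ['Mr.', 'Smith', 'can', 'not', 'come', '.']
print(top_words(sentences, 1))  # [('the', 2)]
```

Checking the abbreviation list before looking at the final period is what keeps "Mr." from closing a sentence; on the full Gutenberg collection, `top_words` would be applied to every extracted sentence to obtain the ranking plotted in Figure 1.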


V. ENGLISH-ASL PARALLEL CORPUS

A. Problem Statement

As stated in the introduction, the main obstacle to processing American Sign Language for statistical analysis, such as statistical machine translation, is the absence of data (corpora), especially in gloss format. By convention, a sign is written with the word of the spoken language that corresponds to its meaning, which keeps the notation easy to understand. For example, the phrase "Do you like learning sign language?" is glossed as "LEARN SIGN YOU LIKE?". Here, the word "you" is replaced by the gloss "YOU" and the word "learning" is glossed as "LEARN". After the learning step, our machine translation system must generate the gloss sentence corresponding to an English input.

B. Ascertainment and approach

Research on the statistical analysis of sign language generally relies on corpora of annotated video sequences. In our case, we only need a bilingual corpus in which the source language is English and the target language is American Sign Language transcribed as glosses. In this study, we started from 880 words (English and ASL glosses) coupled with transformation rules. From these rules, we generated a bilingual corpus containing 800 million words. This corpus does not address semantics or the types of verbs used in sign language, such as "agreement" and "non-agreement" verbs. Figure 2 shows an example of transformation between a written English text and the ASL sentence generated from it. The input is "What did Bobby buy yesterday ?" and the target sentence is "BOBBY BUY WHAT YESTERDAY ?". In this example, we keep the word "YESTERDAY", whereas some references use "PAST", which indicates the past tense, i.e. that the action took place in the past. Likewise, the symbol "?" could be replaced by a facial animation accompanying "WHAT". Our approach is based on the lemmatization of words, and we keep as much information as possible in the sentence so that further approaches can be developed on these corpora. Corpus statistics are shown in Figure 4. The numbers of sentences and tokens are very large, and building the ASL corpus takes more than one week.

Fig. 3. Steps for building ASL corpora

Fig. 4. Size of the American Sign Language Gloss Parallel Corpus 2012 (ASLG-PC12)

Fig. 5. Example of full sentences transformation rules

All parts are available to download online for free [4]. Using the transformation rules in Figure 5, we build the ASL corpora following the steps shown in Figure 3. The input of the system is English sentences and the output is their ASL transcription in gloss. Figure 5 shows only simple rules; more complex rules can be defined starting from them. We can define a part-of-speech sentence for each of the two languages. According to Figure 3, when the rule for a sentence S exists in the database, the lookup returns true, and in this case we apply the transformation directly. Of course, all complex rules must be written by experts in ASL. Figure 5 shows some transformations from English sentences to American Sign Language, using transformation rules made by an expert in linguistics.
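The lookup-and-fallback procedure of Figure 3 can be sketched as follows. The sketch rests on assumptions that do not come from the paper: tokens are given as (word, lemma, tag) triples of the kind CoreNLP produces, hard-coded here instead of being read from its XML output; a full-sentence rule is stored as the gloss order of the English token positions; and the rule tables `SENTENCE_RULES` and `LEMMA_RULES` are hypothetical, holding only the Figure 2 example and the words (the, in, a, an) that the text says may be ignored.

```python
from typing import Dict, List, Tuple

# (surface word, lemma, part-of-speech tag), as a tagger such as CoreNLP provides.
Token = Tuple[str, str, str]

# Hypothetical full-sentence rules: part-of-speech sentence -> order of the
# English token positions in the ASL gloss (positions left out are ignored).
SENTENCE_RULES: Dict[Tuple[str, ...], Tuple[int, ...]] = {
    # "What did Bobby buy yesterday ?" -> "BOBBY BUY WHAT YESTERDAY ?"
    ("WP", "VBD", "NNP", "VB", "NN", "."): (2, 3, 0, 4, 5),
}

# Hypothetical lemma rules; an empty gloss means the English word is ignored.
LEMMA_RULES: Dict[str, str] = {"the": "", "in": "", "a": "", "an": ""}


def to_gloss(tokens: List[Token]) -> str:
    pos_sentence = tuple(tag for _, _, tag in tokens)
    order = SENTENCE_RULES.get(pos_sentence)
    if order is not None:
        # The rule for this part-of-speech sentence exists: apply it directly.
        glosses = [tokens[i][1] for i in order]
    else:
        # No full-sentence rule: transform each lemma and drop ignored words.
        glosses = [LEMMA_RULES.get(lemma.lower(), lemma) for _, lemma, _ in tokens]
        glosses = [g for g in glosses if g]
    # Last step: upper-case the output.
    return " ".join(g.upper() for g in glosses)


question = [("What", "what", "WP"), ("did", "do", "VBD"), ("Bobby", "Bobby", "NNP"),
            ("buy", "buy", "VB"), ("yesterday", "yesterday", "NN"), ("?", "?", ".")]
print(to_gloss(question))  # BOBBY BUY WHAT YESTERDAY ?

statement = [("The", "the", "DT"), ("man", "man", "NN"), ("bought", "buy", "VBD"),
             ("a", "a", "DT"), ("book", "book", "NN"), (".", ".", ".")]
print(to_gloss(statement))  # MAN BUY BOOK .
```

Storing a full-sentence rule as a reordering of positions keeps it independent of the actual words, so one expert-validated rule covers every English sentence with the same part-of-speech sentence; the second call shows the per-lemma fallback when no such rule exists.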

In Figure 3, we describe the steps to transform an English sentence into American Sign Language gloss. The input of the system is the English sentence. Using the CoreNLP tool, we generate an XML file containing morphological information about the sentence after the tokenization task. Then we build the part-of-speech sentence and, thanks to the transformation rules database, we try to transform the whole input. In some cases, the part-of-speech sentence does not exist in the database, so we transform each lemma. The transformation rule for lemmas is presented in Figure 5. In the last step, we apply an uppercase script to transform the output. The transformation rule is not a direct transformation of each lemma: it can be an alignment of words, and it can ignore some English words like (the, in, a, an, ...).

C. Transformation Rules

Not all transformation rules used to transform the English data were verified by experts in linguistics. We validated only 800 rules and the transformation rules for lemmas. We cannot validate all rules because there is an infinite number of possible rules. For this reason, we developed an application that allows experts to enter their rules from an English sentence, without coding. The application is a simple user interface that contains the lemma transformation rules, and the expert composes the lemmas. After that, the expert saves the result and rebuilds the corpora. The resulting corpus is thus built by a collaborative approach and validated by experts.

D. Releases of the English-ASL Corpus

The initial release of this corpus consisted of data up to September 2011. The second release added data up to January 2012, increasing the size from just over 800 sentences to up to 800 million words in English. A forthcoming third release will include data up to early 2013 and will have better tokenization and more words in American Sign Language. For more details, please check the website [4].

VI. DISCUSSION AND CONCLUSION

We described the construction of the English-American Sign Language corpus. We illustrated a novel method for transforming an English written text into American Sign Language gloss. This corpus will be useful for statistical analysis of ASL and related fields [18] [19]. We present the first ASL gloss corpus that exceeds one hundred million sentences, available to all researchers and linguists. During the next phase of the ASLG-PC12 project, we expect to provide both a richer analysis of the existing corpus and other parallel corpora (for example, for French Sign Language and Arabic Sign Language). This will be done by first enriching the rules through experts. Enrichment will be achieved by automatically transforming the current transformation rules database, and then validating the results by hand.

REFERENCES

[1] A. Othman and M. Jemni, "Statistical sign language machine translation: from English written text to American sign language gloss," IJCSI International Journal of Computer Science Issues, vol. 8, issue 5, no. 3, 2011.

[2] M. Sara and W. Andy, "Joining hands: Developing a sign language machine translation system with and for the deaf community," in Proceedings of the Conference and Workshop on Assistive Technologies for People with Vision and Hearing Impairments (CVHI-2007), Granada, Spain, 28-31 August 2007, vol. 415, 2007.

[3] A. Othman and M. Jemni, "English-ASL gloss parallel corpus 2012: ASLG-PC12," in 5th Workshop on the Representation and Processing of Sign Languages: Interactions between Corpus and Lexicon, Istanbul, Turkey, 2012.

[4] A. Othman, "American sign language gloss parallel corpus 2012 (ASLG-PC12)," 2012. [Online]. Available: http://www.achrafothman.net/aslsmt/

[5] M. Jemni and O. E. Ghoul, "Towards web-based automatic interpretation of written text to sign language," in First International Conference on ICT and Accessibility (ICTA-2007), Hammamet, Tunisia, April 2007.

[6] ——, "An avatar based approach for automatic interpretation of text to sign language," in 9th European Conference for the Advancement of the Assistive Technologies in Europe, San Sebastian (Spain), 3-5 October 2007.

[7] ——, "A system to make signs using collaborative approach," in ICCHP, Lecture Notes in Computer Science, Springer Berlin / Heidelberg, vol. 5105, pp. 670-677, Linz, Austria, 2008.

[8] M. Jemni, O. E. Ghoul, and N. B. Yahya, "Sign language MMS to make cell phones accessible to deaf and hard-of-hearing community," in CVHI, Euro-Assist Conference and Workshop on Assistive Technology for People with Vision and Hearing Impairments, Granada, Spain, 2007.

[9] Project Gutenberg, "Gutenberg," 2012. [Online]. Available: http://www.gutenberg.org/

[10] B. Woll, R. Sutton-Spence, and D. Waters, "ECHO data set for British sign language (BSL)," Department of Language and Communication Science, City University (London), 2004.

[11] B. Bergman and J. Mesch, "ECHO data set for Swedish sign language (SSL)," Department of Linguistics, University of Stockholm, 2004.

[12] O. Crasborn, E. Kooij, A. Nonhebel, and W. Emmerik, "ECHO data set for sign language of the Netherlands (NGT)," Department of Linguistics, Radboud University Nijmegen, 2004.

[13] V. Athitsos, C. Neidle, S. Sclaroff, J. Nash, R. Stefan, A. Thangali, H. Wang, and Q. Yuan, "Large lexicon project: American sign language video corpus and sign language indexing/retrieval algorithms," in Proceedings of the 4th Workshop on the Representation and Processing of Sign Languages: Corpora and Sign Language Technologies, LREC, 2010.

[14] J. Bungeroth, D. Stein, M. Zahedi, and H. Ney, "A German sign language corpus of the domain weather report," in 5th international, 2006, p. 29.

[15] S. Morrissey, H. Somers, R. Smith, S. Gilchrist, and I. D, "4th workshop on the representation and processing of sign languages: Corpora and sign language technologies building a sign language corpus for use in machine translation," 2010.

[16] W. C. Stokoe, "10th anniversary classics sign language structure: An outline of the visual communication systems of the American deaf," 1960.

[17] S. Prillwitz and H. Zienert, "Hamburg notation system for sign language: Development of a sign writing with computer application," in Current trends in European sign language research: Proceedings of the 3rd European Congress on Sign Language Research, 1990.

[18] M. Boulares and M. Jemni, "Mobile sign language translation system for deaf community," in W4A ACM, 9th International Cross-Disciplinary Conference on Web Accessibility, Lyon, France, 2012.

[19] K. Jaballah and M. Jemni, "Toward automatic sign language recognition from web3d based scenes," in ICCHP, Lecture Notes in Computer Science, Springer Berlin / Heidelberg, vol. 6190, pp. 205-212, Vienna, Austria, 2010.
